When we talk about the dangers posed by artificial intelligence, the emphasis is usually on the unintended side effects. We worry that we might accidentally create a super-intelligent AI and forget to program it with a conscience; or that we’ll deploy criminal sentencing algorithms that have soaked up the racist biases of their training data.

What about the people who actively want to use AI for immoral, criminal, or malicious purposes? Aren’t they more likely to cause trouble — and sooner? The answer is yes, according to more than two dozen experts from institutes including the Future of Humanity Institute, the Centre for the Study of Existential Risk, and the Elon Musk-backed non-profit OpenAI. Very much yes.

In a report published today titled “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation,” these academics and researchers lay out some of the ways AI might be used to sting us in the next five years, and what we can do to stop it. While AI can enable some pretty nasty new attacks, the paper’s co-author Miles Brundage of the Future of Humanity Institute says we certainly shouldn’t panic or abandon hope.

“I like to take the optimistic framing, which is that we could do more,” says Brundage. “The point here is not to paint a doom-and-gloom picture — there are many defenses that can be developed and there’s much for us to learn. I don’t think it’s hopeless at all, but I do see this paper as a call to action.”

The report is expansive, but focuses on a few key ways AI is going to exacerbate threats for both digital and physical security systems, as well as create completely new dangers. It also makes five recommendations on how to combat these problems — including getting AI engineers to be more upfront about the possible malicious uses of their research; and starting new dialogues between policymakers and academics so that governments and law enforcement aren’t caught unawares.

Let’s start with potential threats, though: one of the most important of these is that AI will dramatically lower the cost of certain attacks by allowing bad actors to automate tasks that previously required human labor.

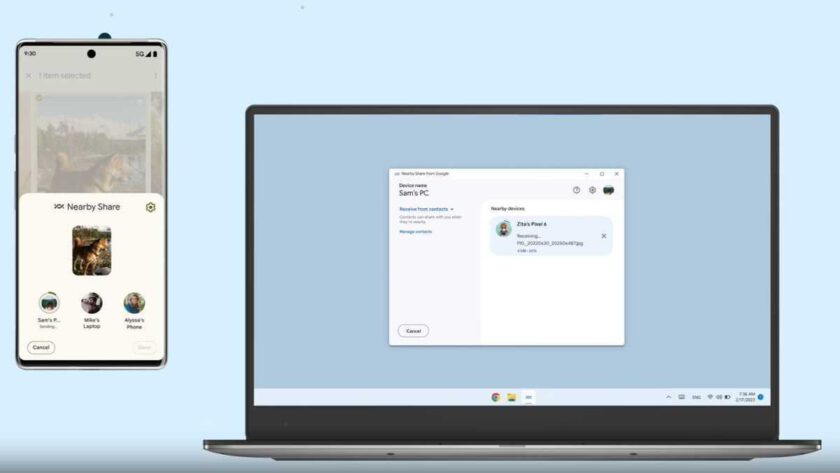

Take, for example, spear phishing, in which individuals are sent messages specially designed to trick them into giving up their security credentials. (Think: a fake email from your bank or from what appears to be an old acquaintance.) AI could automate much of the work here, mapping out an individual’s social and professional network, and then generating the messages. There’s a lot of effort going into creating realistic and engaging chatbots right now, and that same work could be used to create a chatbot that poses as your best friend who suddenly, for some reason, really wants to know your email password.

This sort of attack sounds complex, but the point is that once you’ve built the software to do it all, you can use it again and again at no extra cost. Phishing emails are already harmful enough: they were responsible for both the iCloud leak of celebrities’ pictures in 2014 and the hack of private emails from Hillary Clinton’s campaign chairman John Podesta. The latter not only had an influence on the 2016 US presidential election, it also fed a range of conspiracy theories like Pizzagate, which nearly got people killed. Think about what an automated AI spear-phisher could do to tech-illiterate government officials.

The second big point raised in the report is that AI will add new dimensions to existing threats. To stay with the spear phishing example, AI could be used to generate not only emails and text messages, but also fake audio and video. We’ve already seen how AI can be used to mimic a target’s voice after studying just a few minutes of recorded speech, and how it can manipulate footage of people speaking, turning them into puppets. The report is focused on threats coming up in the next five years, and these are fast becoming issues.

And, of course, there is a whole range of other unsavory practices that AI could exacerbate. Political manipulation and propaganda for a start (again, areas where fake video and audio could be a huge problem), but also surveillance, especially when used to target minorities. The prime example of this has been in China, where facial recognition and people-tracking cameras have turned one border region, home to the largely Muslim Uighur minority, into a “total surveillance state.”

Read more on The Verge.